Cognitive, Systems and Computational Neuroscience

This site provides information about ongoing research in Jack Gallant's cognitive, systems and computational neuroscience lab at UC Berkeley. Here you can find our cool brain viewers, some of our published papers, information about the great people who do the work, our open data, open source code, and tutorials. If you would like to know more about the general philosophy of the lab, please listen to this Freakonomics podcast interview with Jack Gallant or to these OHBM discussions between Peter Bandettini and Jack Gallant [discussion 1] [discussion 2].

We are recruiting postdocs!

We currently have openings for potential postdocs. If you are interested please contact Jack Gallant directly (gallant @ berkeley.edu).

Recent news

New preprint!

Model connectivity: leveraging the power of encoding models to overcome the limitations of functional connectivity (Meschke et al., in review).

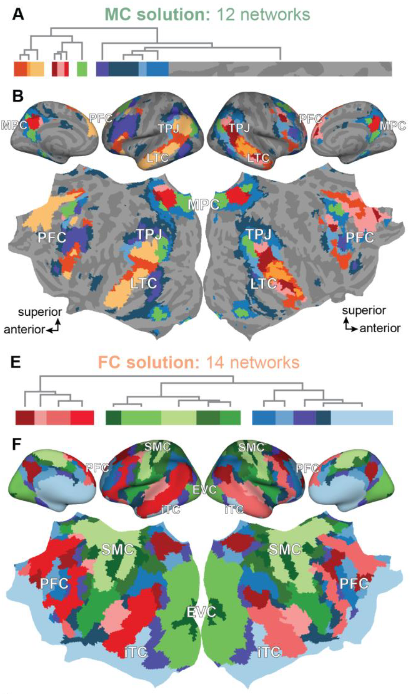

Functional connectivity (FC) is the most popular method for recovering functional networks of brain areas with fMRI. However, because FC is defined as temporal correlations in brain activity, FC networks are inevitably confounded by noise and their function cannot be determined directly from FC. To overcome these limitations, we have developed model connectivity (MC). MC is defined as similarities in encoding model weights, which quantify reliable functional activity in terms of interpretable stimulus- or task-related features. In this paper we compare these two methods directly in a language comprehension dataset. We confirm the confounds of FC, and we show that MC does not suffer from these confounds. MC recovers more spatially localized networks and it reveals their functional assignment. MC is a powerful tool for recovering the functional networks that support complex cognitive processes.
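
The distinction between the two definitions can be illustrated with a toy simulation. This is only a sketch under stated assumptions, not the analysis from the paper: the function names are hypothetical, the data are synthetic, the encoding model is a plain ridge regression, and Pearson correlation is assumed as the similarity measure for both FC and MC.

```python
import numpy as np

def functional_connectivity(responses):
    """FC: correlation between voxel time series.
    responses has shape (n_timepoints, n_voxels)."""
    return np.corrcoef(responses, rowvar=False)

def fit_ridge(features, responses, alpha=1.0):
    """Closed-form ridge regression; returns weights of shape
    (n_features, n_voxels). A stand-in for an encoding model."""
    n_features = features.shape[1]
    gram = features.T @ features + alpha * np.eye(n_features)
    return np.linalg.solve(gram, features.T @ responses)

def model_connectivity(weights):
    """MC (assumed here to be Pearson correlation between the
    weight vectors of each pair of voxels)."""
    return np.corrcoef(weights, rowvar=False)

# Synthetic data: all names and sizes below are illustrative assumptions.
rng = np.random.default_rng(0)
n_timepoints, n_features = 500, 10
features = rng.standard_normal((n_timepoints, n_features))

# Voxels 0 and 1 share one tuning profile; voxel 2 has a different one.
tuning_a = rng.standard_normal(n_features)
tuning_b = rng.standard_normal(n_features)
true_weights = np.stack([tuning_a, tuning_a, tuning_b], axis=1)

# Noise shared by every voxel (for example, a global physiological signal)
# plus a small amount of independent noise per voxel.
shared_noise = 3.0 * rng.standard_normal((n_timepoints, 1))
private_noise = 0.5 * rng.standard_normal((n_timepoints, 3))
responses = features @ true_weights + shared_noise + private_noise

fc = functional_connectivity(responses)
mc = model_connectivity(fit_ridge(features, responses))

print("FC:\n", np.round(fc, 2))
print("MC:\n", np.round(mc, 2))
```

In this toy setup the shared noise enters every voxel's time series and therefore contributes to every FC entry, whereas MC compares only the part of each response that the stimulus features explain. Real analyses would also need cross-validation and per-voxel regularization, which are omitted here.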

New paper!

Phonemic segmentation of narrative speech in human cerebral cortex (Gong et al., Nature Communications, 2023).

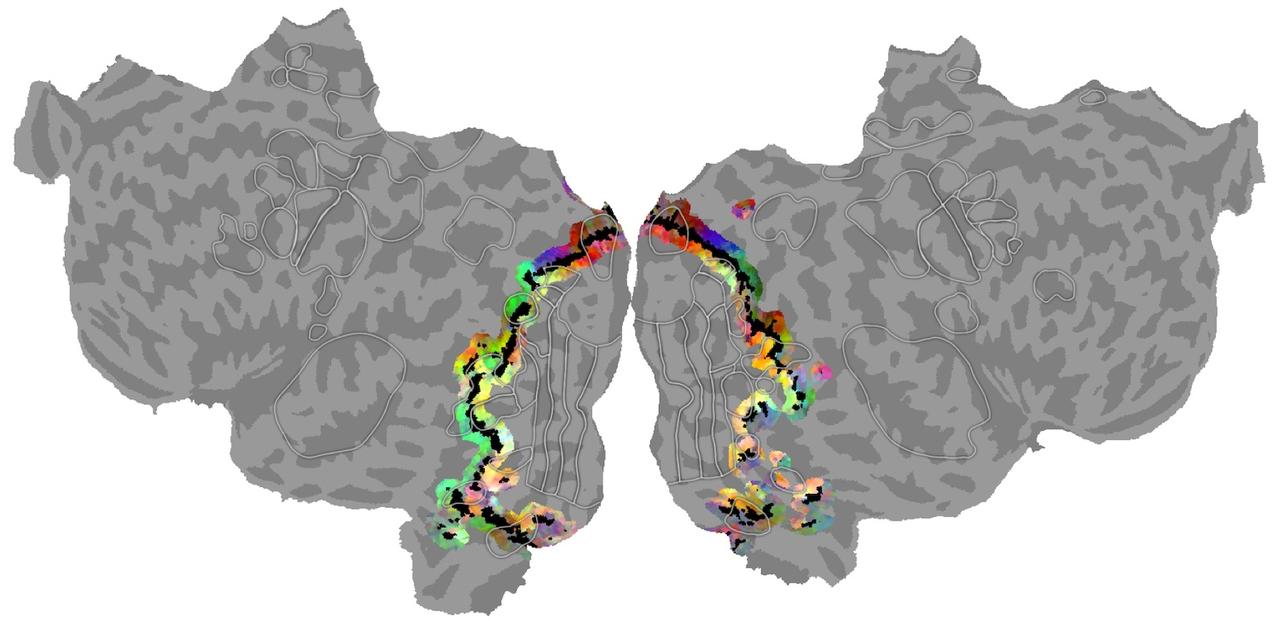

Phonemes are a critical intermediate element of speech. This fMRI study identifies the brain representation of single phonemes, and of diphones and triphones. We find that many regions in and around the auditory cortex represent phonemes. These regions include classical areas in the dorsal superior temporal gyrus and a larger region in the lateral temporal cortex (where diphone features appear to be represented). Furthermore, we identify regions where phonemic processing and lexical retrieval are intertwined. (Note: this is work done in collaboration with the Theunissen lab here at UCB.)

Our (former) senior postdoc, Dr. Fatma Deniz, has accepted a tenured full Professor position at the Technical University of Berlin. She began her new position on April 1, 2023. Congratulations Professor Deniz! We expect great things from you!

New paper!

Semantic representations during language production are affected by context (Deniz et al., J. Neuroscience, 2023).

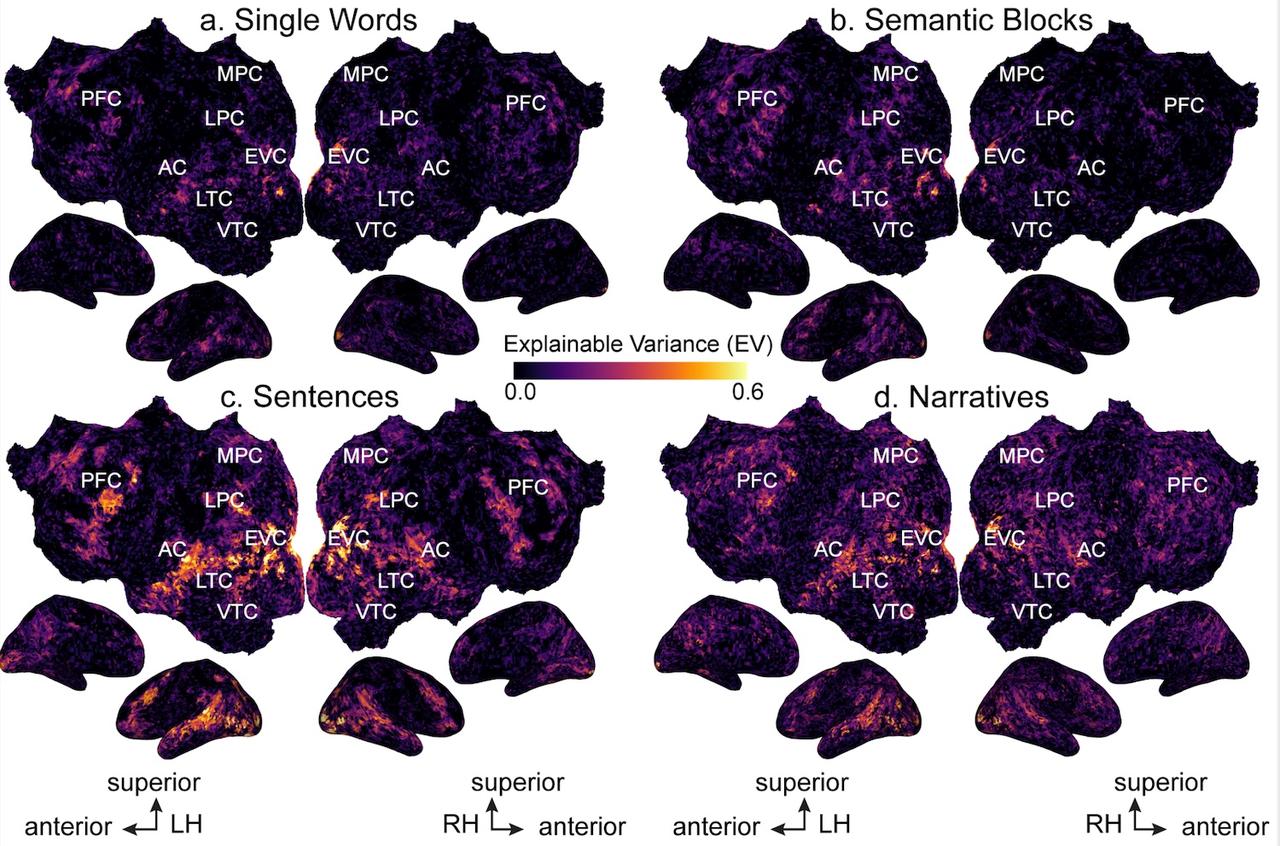

Context is important for understanding the meaning of natural language, but most neuroimaging language studies use isolated words and sentences with little context. This study investigates whether the results of studies that use out-of-context stimuli generalize to natural language. We find that increasing context improves the quality of neuroimaging data, and that it changes the representation of semantic information in the brain. These results suggest that findings from studies using out-of-context stimuli may not generalize to natural language used in daily life.

New paper!

Feature-space selection with banded ridge regression (Dupre la Tour et al., NeuroImage, 2022).

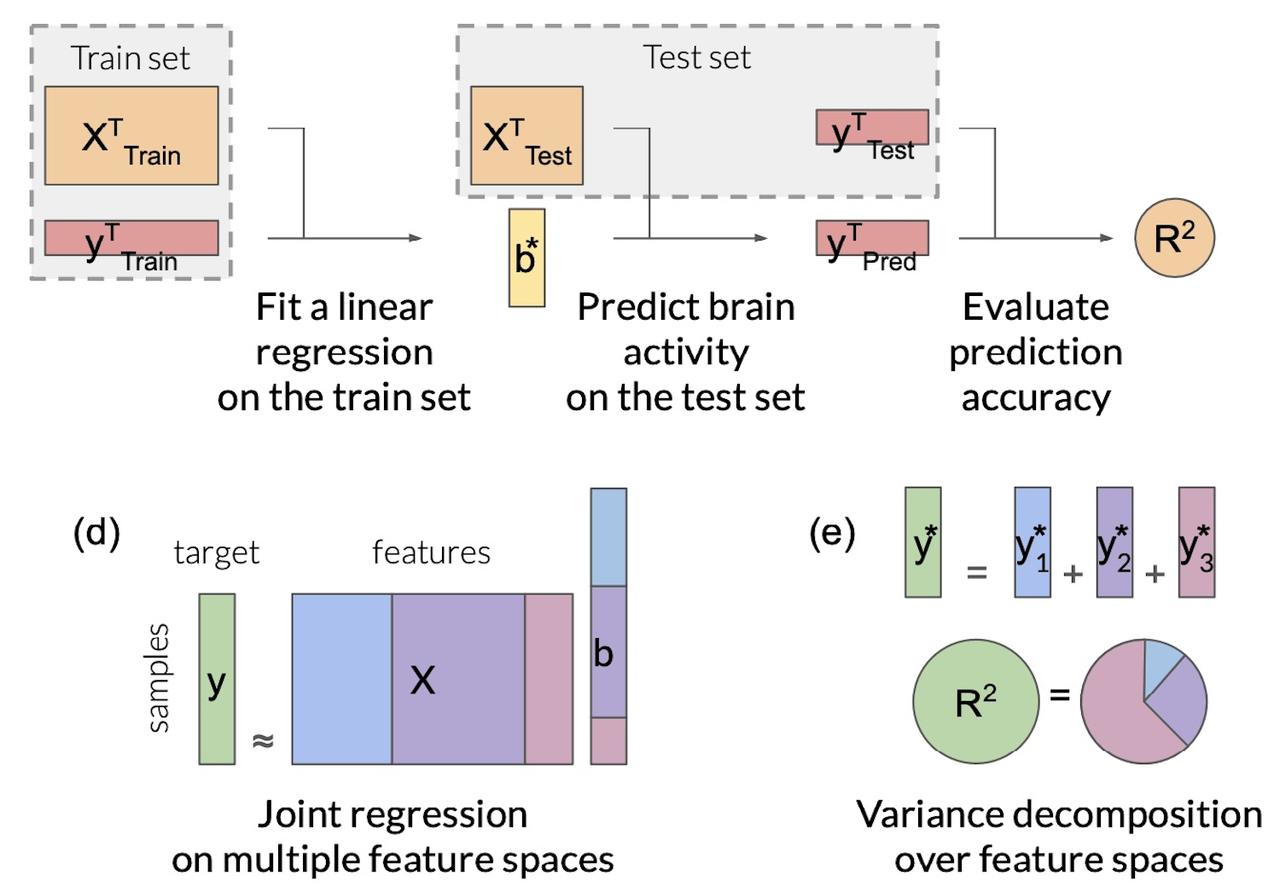

Encoding models identify the information represented in brain recordings, but fitting multiple models simultaneously presents several challenges. This paper describes how banded ridge regression can be used to solve these problems. Furthermore, several methods are proposed to address the computational challenge of fitting banded ridge regressions on large numbers of voxels and feature spaces. All implementations are released in an open-source Python package called Himalaya.
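
As a rough illustration of the idea, banded ridge regression gives each feature space its own regularization strength instead of one shared value. The NumPy sketch below is not the Himalaya API and not the solvers from the paper: the function names are hypothetical, the data are synthetic, and a small exhaustive grid search on a held-out split stands in for the hyperparameter search, with one set of strengths chosen for all voxels at once for simplicity.

```python
import itertools
import numpy as np

def banded_ridge_fit(feature_spaces, responses, alphas):
    """Ridge regression with one penalty per feature space (band).
    feature_spaces: list of arrays, each (n_samples, n_features_i)
    responses: (n_samples, n_voxels); alphas: one value per band."""
    X = np.hstack(feature_spaces)
    # Diagonal penalty: every feature in band i is penalized by alphas[i].
    penalty = np.concatenate(
        [np.full(Xi.shape[1], a) for Xi, a in zip(feature_spaces, alphas)]
    )
    return np.linalg.solve(X.T @ X + np.diag(penalty), X.T @ responses)

def banded_ridge_grid_search(train_spaces, train_y, val_spaces, val_y, alpha_grid):
    """Try every combination of per-band penalties and keep the one with
    the lowest mean squared error on the validation split."""
    X_val = np.hstack(val_spaces)
    best = None
    for alphas in itertools.product(alpha_grid, repeat=len(train_spaces)):
        weights = banded_ridge_fit(train_spaces, train_y, alphas)
        err = float(np.mean((val_y - X_val @ weights) ** 2))
        if best is None or err < best[0]:
            best = (err, alphas, weights)
    return best

# Synthetic example: feature space X1 drives the responses, X2 does not.
rng = np.random.default_rng(0)
X1 = rng.standard_normal((300, 5))
X2 = rng.standard_normal((300, 20))
true_w = rng.standard_normal((5, 4))
Y = X1 @ true_w + rng.standard_normal((300, 4))

train, val = slice(0, 200), slice(200, 300)
alpha_grid = [0.1, 10.0, 1000.0]
best_err, best_alphas, best_w = banded_ridge_grid_search(
    [X1[train], X2[train]], Y[train], [X1[val], X2[val]], Y[val], alpha_grid
)
print("selected penalties per feature space:", best_alphas)
```

The number of combinations in this grid search grows exponentially with the number of feature spaces, which is one way to see the computational challenge mentioned above.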

Christine Tseng has received their PhD! Christine has recently been working on functional mapping of the self, others, and social relationships. They will be taking up a postdoctoral position in the lab while the studies are prepared for publication. Congratulations Christine!

New paper!

Visual and linguistic semantic representations are aligned at the border of human visual cortex (Popham et al., Nature Neuroscience, 2021).

The human brain contains functionally and anatomically distinct networks for representing semantic information in each sensory modality, and a separate, distributed amodal conceptual network. In this study we examined the spatial organization of visual and amodal semantic functional maps. The pattern of semantic selectivity in these two distinct networks corresponds along the boundary of visual cortex: for visual categories represented posterior to the boundary, the same categories are represented linguistically on the anterior side. These results suggest that these two networks are smoothly joined to form one contiguous map.